Logistic Regression with Single Predictor in R

In this post, we will learn how to perform a simple logistic regression using Generalized Linear Models (glm) in R. We will work with logistic regression model between a binary categorical variable as response variable and a single numerical predictor.

Data for logistic regression

Let us load the packages needed.

library(tidyverse)

library(palmerpenguins)

theme_set(theme_bw(16))We will use two variables from Palmer penguins dataset. To simplify let us use dataset from one of the Penguin species and select just two variables, sex and body mass. Here, sex is a categorial variable and body mass is numerical variable.

df %

drop_na() %>%

filter(species=="Gentoo") %>%

select(sex, body_mass_g)df %>% head()

# A tibble: 6 × 2

sex body_mass_g

1 female 4500

2 male 5700

3 female 4450

4 male 5700

5 male 5400

6 female 4550Variables for logistic regression

In this logistical regression model, we are interested in classifying the sex of penguins using body mass. Let us make a simple boxplot to see how well sex variable is associated with body mass of penguins.

df %>%

ggplot(aes(x=sex, y= body_mass_g, color=sex))+

geom_boxplot(outlier.shape = NA)+

geom_jitter(width=0.1)+

theme(legend.position = "none")+

scale_color_brewer(palette="Set1")

ggsave("penguins_sex_vs_body_mass_boxplot.png") Logistic regression to classify Penguin sex with one predictor ### Building Logistic Regression with glm()

Logistic regression to classify Penguin sex with one predictor ### Building Logistic Regression with glm()

To build logistic regression model we will use glm() function from stats package that has implementations of GLMs. glm() function takes three key arguments,

formula, where we tell glm() the variables and how we want to model family name, here we specify the exponential family that we want to use, “biomial” for logistic regression data: a dataframe with all the variables specified in the formula

# single predictor

glm_res_sp %

glm(formula = sex ~ body_mass_g,

family = binomial)By printing the resulting object, we can see the basic information regarding the model we built. We get the formula we used and the coeffients from the logistic regression model.

glm_res_sp

Call: glm(formula = sex ~ body_mass_g, family = binomial, data = .)

Coefficients:

(Intercept) body_mass_g

-55.03337 0.01089

Degrees of Freedom: 118 Total (i.e. Null); 117 Residual

Null Deviance: 164.9

Residual Deviance: 45.1 AIC: 49.1Results from Logistic Regression with single predictor uslng glm()

To fully investigate the results, we need to use summary() function on the result object. And this gives us the estimated coefficients and the p-value of the association between the two variables. We can see that

glm_res_sp %>% summary()Call:

glm(formula = sex ~ body_mass_g, family = binomial, data = .)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -55.033367 11.651375 -4.723 2.32e-06 ***

body_mass_g 0.010888 0.002311 4.711 2.47e-06 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 164.893 on 118 degrees of freedom

Residual deviance: 45.105 on 117 degrees of freedom

AIC: 49.105

Number of Fisher Scoring iterations: 7Cleaning Logistic Regression results with broom

The results from statistical models like glm() are often hard to read. So we will use broom package’s tidy() function to convert the resulting statistical object to a tidy dataframe.

glm_res_sp %>%

tidy()

# A tibble: 2 × 5

term estimate std.error statistic p.value

1 (Intercept) -55.0 11.7 -4.72 0.00000232

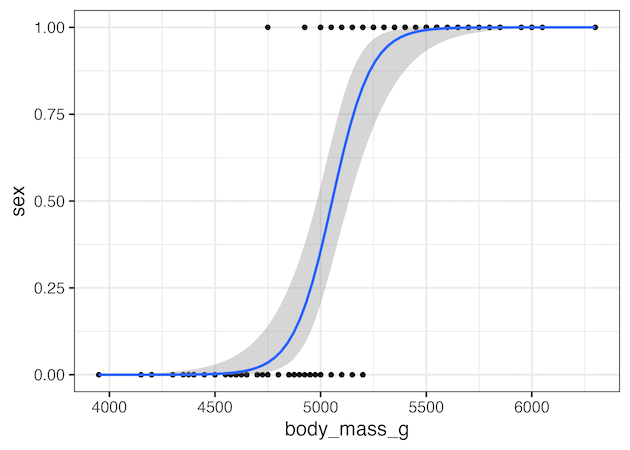

2 body_mass_g 0.0109 0.00231 4.71 0.00000247We can also visualize the logistic regression model by plotting the two variables and a logistic curve. Note here we use ggplot2’s geom_smooth() function to make the plot.

df %>%

mutate(sex=as.numeric(sex)-1) %>%

ggplot(aes(x=body_mass_g, y=sex)) +

geom_point() +

geom_smooth(method="glm",

se=TRUE,

method.args = list(family=binomial))

ggsave("plotting_logistic_regression_model_with_glm.png") Plotting logistic regression model with geom_smooth()

Plotting logistic regression model with geom_smooth()